Ur

The Ur module provides real-time tracking of 3D hand poses from a monocular RGB camera feed on mobile phones. Tracking and reconstructing the 3D pose and geometry of multiple interacting hands is a challenging problem that is highly relevant to human-computer interaction applications such as AR/VR, robotics, hand gesture control, and sign language recognition. To address the depth ambiguities inherent in RGB data, we propose a novel lightweight CNN that regresses the camera pose, the 3D landmark positions, and (optionally) a dense 3D hand mesh.

The Ur hand tracker officially supports iOS only; Android support is experimental.

Enable Bitcode is automatically set to No in the Xcode project settings. This is required for the hand tracker to work.

Landmark

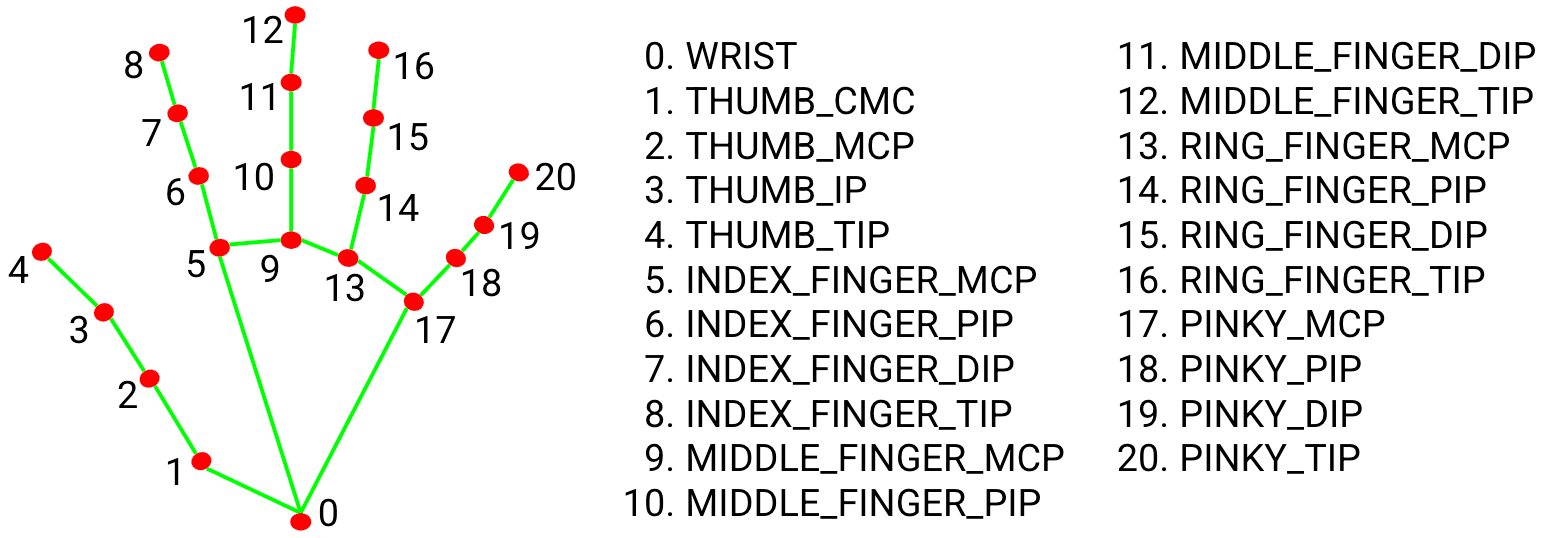

There are 21 landmarks per hand. A landmark is a 3D vector (x,y,z) that holds the local translation for each hand bone joint.

The root landmark is the palm joint of the hand, and the other 20 landmarks define the tree-like layout of the remaining hand and finger joints.

Translation

Each hand also has a translation: an additional 3D vector (x,y,z) that contains the global translation of the hand in camera space. Displace every landmark of the hand by this translation to get the landmarks in AR camera space.
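The displacement described above can be sketched as follows. This is a minimal illustration, not part of the Ur API: the `HandSpace` class and `ToCameraSpace` method are hypothetical names, and the sketch assumes that one hand's landmarks arrive as 21 consecutive (x, y, z) triples in a flat `float[]`, which this document does not confirm. It uses `System.Numerics.Vector3` so it runs outside Unity; inside Unity you would likely use `UnityEngine.Vector3` instead.

```csharp
using System;
using System.Numerics;

// Hypothetical helper, not part of Auki.Ur.
public static class HandSpace
{
    public const int LandmarksPerHand = 21;

    // Assumption: `landmarks` holds 21 (x, y, z) triples back to back (63 floats)
    // and `translation` holds a single (x, y, z) triple for the same hand.
    public static Vector3[] ToCameraSpace(float[] landmarks, float[] translation)
    {
        if (landmarks.Length != LandmarksPerHand * 3)
            throw new ArgumentException("Expected 63 floats (21 landmarks x 3).", nameof(landmarks));
        if (translation.Length != 3)
            throw new ArgumentException("Expected 3 floats (x, y, z).", nameof(translation));

        var offset = new Vector3(translation[0], translation[1], translation[2]);
        var result = new Vector3[LandmarksPerHand];
        for (int i = 0; i < LandmarksPerHand; i++)
        {
            // Read the local joint position, then displace it by the hand translation.
            var local = new Vector3(landmarks[3 * i], landmarks[3 * i + 1], landmarks[3 * i + 2]);
            result[i] = local + offset;
        }
        return result;
    }
}
```

Keeping the local landmarks and the translation separate until this step means the hand shape can still be inspected on its own, independent of where the hand sits in front of the camera.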

Basic Usage

Import the Ur module

using Auki.Ur;

Start the HandTracker

HandTracker _handTracker;

HandTracker is a singleton. You can get the instance with:

_handTracker = HandTracker.GetInstance();

To work, the hand tracker requires access to the currently running ARSession, Camera, and ARRaycastManager:

_handTracker.SetARSystem(arSession, arCamera, arRaycastManager);

Now the only thing left to do is start the hand tracker:

_handTracker.Start();

If all goes well, this method returns true, and the hand tracker starts processing frames and detecting hand landmarks.

Stop the HandTracker

To save battery and CPU, stop the hand tracker whenever the application is not actively using it. To stop it, call:

_handTracker.Stop();

Displaying a helper hand mesh

It is possible to verify that everything is working by showing/hiding the helper hand mesh:

_handTracker.ShowHandMesh();

_handTracker.HideHandMesh();

Accessing the hand landmarks

To access the current hand landmarks directly, call the following methods of the HandTracker class:

float[] landmarks = _handTracker.Get3DLandmarks();

float[] translations = _handTracker.GetTranslations();

We can also register a callback that is called whenever new landmarks are detected. This is the recommended way to access the landmarks:

_handTracker.OnUpdate += (landmarks, translations, isRightHand, score) =>
{
    // landmarks processing code:
};
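When more than one hand is tracked, the flat arrays returned by the methods above (or passed to the callback) have to be split per hand before processing. The sketch below shows one way to do that. It is an assumption, not documented behavior, that hands are stored back to back with 63 landmark floats (21 landmarks x 3) and 3 translation floats per hand; `HandArrays` and its methods are hypothetical names, not part of the Ur API.

```csharp
using System;

// Hypothetical helper, not part of Auki.Ur.
public static class HandArrays
{
    public const int LandmarkFloatsPerHand = 21 * 3;
    public const int TranslationFloatsPerHand = 3;

    // Assumption: hands are packed one after another in the landmarks array.
    public static int HandCount(float[] landmarks)
    {
        if (landmarks.Length % LandmarkFloatsPerHand != 0)
            throw new ArgumentException("Length is not a multiple of 63.", nameof(landmarks));
        return landmarks.Length / LandmarkFloatsPerHand;
    }

    // Copies the 63 landmark floats that belong to the given hand.
    public static float[] LandmarksOfHand(float[] landmarks, int handIndex)
    {
        if (handIndex < 0 || handIndex >= HandCount(landmarks))
            throw new ArgumentOutOfRangeException(nameof(handIndex));
        var hand = new float[LandmarkFloatsPerHand];
        Array.Copy(landmarks, handIndex * LandmarkFloatsPerHand, hand, 0, LandmarkFloatsPerHand);
        return hand;
    }

    // Copies the (x, y, z) translation that belongs to the given hand.
    public static float[] TranslationOfHand(float[] translations, int handIndex)
    {
        int start = handIndex * TranslationFloatsPerHand;
        if (handIndex < 0 || start + TranslationFloatsPerHand > translations.Length)
            throw new ArgumentOutOfRangeException(nameof(handIndex));
        var t = new float[TranslationFloatsPerHand];
        Array.Copy(translations, start, t, 0, TranslationFloatsPerHand);
        return t;
    }
}
```

Validating the array lengths up front makes a wrong layout assumption fail loudly instead of silently producing misplaced joints.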

Calibration Process

Problem

Without calibration, the hand tracker projects the hand landmarks into the wrong depth range. To correct the error in the landmarks' depth, a calibration process is required that calculates a per-user "hand scale" constant.

Proposed Process

In a valid and stable AR session, ask the user to place their hand on a flat surface plane (like a table) and press a button (to inform the hand tracker that it can start the calibration process).

During the process, we should display some feedback that the hand tracker is "operating"...

A plane raycast is performed to determine the distance from the camera to the plane on which the user has laid their hand. Ask the user to hold the camera above the hand and slowly move it up and down until the calibration is finished.

The difference between the reported center of the hand and the distance from the camera to the plane should suffice to calculate a handScale value that satisfies the following:

The zScale depends on the "hand size" constant plus a small term that varies with the distance to the camera. As found in the technical report, the distance to the camera also contributes to the error: the farther away from the camera, the greater the error in the reported depth.
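To make the idea of comparing the reported hand depth against the raycast distance concrete, here is a deliberately simple sketch. It is not the calibration that the hand tracker performs internally, and it ignores the distance-dependent term described above: it only averages the ratio between the raycast plane distance and the reported depth over several measurements. The `CalibrationSketch` class, the `EstimateDepthScale` method, and the sample layout are all hypothetical.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical illustration, not the library's calibration algorithm.
public static class CalibrationSketch
{
    // Each sample pairs the depth the tracker reported for the hand center
    // with the raycast distance from the camera to the plane (same units).
    public static float EstimateDepthScale(
        IReadOnlyList<(float reportedDepth, float planeDistance)> samples)
    {
        double sum = 0.0;
        int used = 0;
        foreach (var (reportedDepth, planeDistance) in samples)
        {
            // Skip degenerate measurements instead of dividing by zero.
            if (reportedDepth <= 0f || planeDistance <= 0f) continue;
            sum += planeDistance / reportedDepth;
            used++;
        }
        if (used == 0)
            throw new InvalidOperationException("No usable measurements.");
        return (float)(sum / used);
    }
}
```

Averaging over many frames while the camera moves up and down smooths out per-frame noise, which is presumably why the process asks the user to keep moving the camera until calibration finishes.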

_handTracker.StartCalibration(report =>
{
    switch (report.StatusReport)
    {
        case HandTracker.CalibrationStatusReport.CALIBRATION_FINISHED:
        {
            Debug.Log("Calibration success");
            break;
        }
        case HandTracker.CalibrationStatusReport.CALIBRATION_PROGRESS:
        {
            Debug.Log(report.Progress);
            break;
        }
        case HandTracker.CalibrationStatusReport.CALIBRATION_AR_NOT_READY:
        {
            Debug.Log("Scan the room");
            break;
        }
        case HandTracker.CalibrationStatusReport.FAILURE_NO_HAND:
        case HandTracker.CalibrationStatusReport.FAILURE_NO_PLANE:
        case HandTracker.CalibrationStatusReport.FAILURE_NO_MEASUREMENTS:
        {
            Debug.Log("Calibration failed");
            break;
        }
    }
}, false);

Post-calibration

The resulting "hand scale" value from the calibration process should be valid for the same user across sessions. Therefore, we should offer a mechanism to store this value somewhere, so the user does not need to re-calibrate every time they use the hand tracker...

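// Save the calibration state after a successful calibration.
// HandSizeKey and ZScaleKey are string constants defined by your application.
var state = _handTracker.GetCalibrationState();
PlayerPrefs.SetFloat(HandSizeKey, state.HandSize);
PlayerPrefs.SetFloat(ZScaleKey, state.ZScale);
PlayerPrefs.Save();

// Restore the saved calibration state in a later session.
_handTracker.SetCalibrateState(new HandTracker.CalibrationState()
{
    HandSize = PlayerPrefs.GetFloat(HandSizeKey),
    ZScale = PlayerPrefs.GetFloat(ZScaleKey)
});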